Cloud Confusing

Explaining hosting, AWS, Wordpress, static sites, and all manner of cloud solutions.

Cache Invalidation and Amazon S3

So you have an Amazon S3 hosted site with CloudFront caching in front of it? Nice work: it’s an affordable and highly scalable solution. One downside is that the cache, which helps make your site so fast and cheap to run, is designed to hold on to files, so it may serve an old version to visitors.

That’s normally not a problem (it’s literally the point of a cache), but if you are making a lot of changes to the site and you want visitors to see them as soon as possible, then you will need to invalidate the cache and tell CloudFront to serve the most recent files.

Here is how you can do that…

Basically, what’s happening in this situation is this (vastly oversimplified, but it should help):

You are uploading files to S3. You’ve told CloudFront to live in front of those files (“at the edge”), so when a user requests those files they are served from CloudFront. Good news: this is fast and cheap. Bad news: when you upload a new version of the file to S3, the cached version at CloudFront is not killed off. This means CloudFront might be serving an old version of the file for some time.

Here is the key thing to understand about working with CloudFront (from AWS docs):

CloudFront distributes files … when the files are requested, not when you put new or updated files in your origin. If you update an existing file in your origin with a newer version that has the same name, an edge location won’t get that new version [until] …

The old version of the file in the cache expires.

There’s a user request for the file at that edge location.

What this means is that your files are cached until they are not. CloudFront will pick up S3’s latest version of a file once the cached copy has expired and someone requests it, but it won’t go looking for it just because you happened to upload a new version. This means we need to use the tools we have, mainly filename versioning and cache lifetimes (Cache-Control headers and the CloudFront TTL), to make sure the right files are available.
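To make that behavior concrete, here is a toy model of a single edge location. It is only a sketch: the class name ToyEdgeCache, the dictionary standing in for the S3 bucket, and the explicit `now` argument are all made up for illustration, and real CloudFront does far more than this.

```python
from dataclasses import dataclass, field


@dataclass
class ToyEdgeCache:
    """A toy model of one edge location (hypothetical, not CloudFront's real logic)."""
    origin: dict          # stands in for the S3 bucket: path -> content
    ttl_seconds: int      # how long a cached copy counts as fresh
    _cached: dict = field(default_factory=dict)  # path -> (content, fetched_at)

    def get(self, path: str, now: int) -> str:
        entry = self._cached.get(path)
        if entry is not None and now - entry[1] < self.ttl_seconds:
            return entry[0]          # still fresh: the origin is not consulted
        content = self.origin[path]  # expired or never cached: go to the origin
        self._cached[path] = (content, now)
        return content


bucket = {"/script.js": "v1"}
edge = ToyEdgeCache(origin=bucket, ttl_seconds=86400)

first = edge.get("/script.js", now=0)      # first request, fetched from the origin
bucket["/script.js"] = "v2"                # you upload a new version to the origin
stale = edge.get("/script.js", now=3600)   # an hour later the edge still has v1
fresh = edge.get("/script.js", now=86400)  # the TTL has passed, so v2 is fetched
print(first, stale, fresh)                 # v1 v1 v2
```

Notice that the upload itself changes nothing at the edge. The new version only shows up once the old copy has expired and somebody asks for the file again.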

Filename Versioning

If the above scenario bothers you or doesn’t work for your business, then you need to find a solution. That might be as simple as versioning your file names. For example: if you are working on script.js and want to upload a new version to your production site, you might change the name of the file to script_01.js and then to script_02.js. You’ll also need to update any links or src attributes that point to that file, obviously. This doesn’t invalidate the cache; it sidesteps the cached version altogether.

Obviously this solution won’t work well for something like an index.html file, where changing the name isn’t practical.

This might sound like a pain, but tools like gulp-rev make it very easy to do.
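If you are curious what automated versioning can look like, here is a minimal sketch that derives the version from the file’s content instead of a hand-maintained counter. The function name versioned_name and the ten-character SHA-256 prefix are my own choices for illustration; this is not a description of how any particular build tool does it.

```python
import hashlib
from pathlib import PurePosixPath


def versioned_name(filename: str, content: bytes, digest_chars: int = 10) -> str:
    """Insert a short content hash before the extension of filename."""
    digest = hashlib.sha256(content).hexdigest()[:digest_chars]
    path = PurePosixPath(filename)
    return str(path.with_name(f"{path.stem}-{digest}{path.suffix}"))


old = versioned_name("js/script.js", b"console.log('v1');")
new = versioned_name("js/script.js", b"console.log('v2');")
print(old)
print(new)
```

Because the name only changes when the content changes, unchanged files keep their cached copies and edited files get a brand new URL that no cache has seen before.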

Cache-Control Headers

All browsers have a built-in cache. This is so you don’t need to fetch the same jQuery file a thousand times a day. Each file can define its own cache handling with the Cache-Control HTTP header. The important pieces here are the max-age directive and the older, separate Expires header, which control the life of a cached file.

For example, you might specify something like cache-control: max-age=2628000, which tells the browser it can reuse its cached copy for about one month; after that it should go back to the server and fetch a fresh version, possibly with changes. Keep in mind that this number is in seconds, not milliseconds.
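If you’d rather not do the seconds math in your head, a few lines of Python will do it. This is just a sketch; the helper name max_age_header is made up, and “one month” here means one twelfth of a 365-day year, which is where 2628000 comes from.

```python
from datetime import timedelta


def max_age_header(lifetime: timedelta) -> str:
    """Build a Cache-Control value; max-age is expressed in whole seconds."""
    return f"max-age={int(lifetime.total_seconds())}"


one_month = timedelta(days=365) / 12      # one twelfth of a 365-day year
print(max_age_header(one_month))          # max-age=2628000
print(max_age_header(timedelta(days=1)))  # max-age=86400
```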

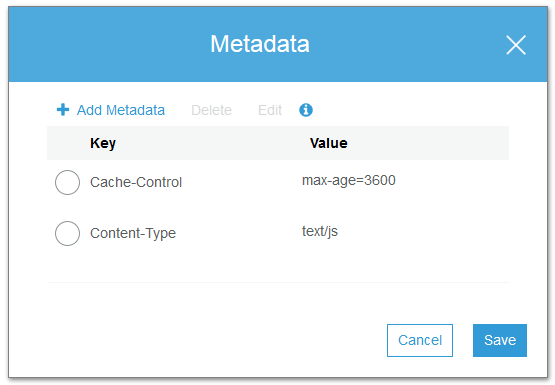

Using the S3 interface it’s easy to control cache headers. You just need to go into your bucket, find the file you are concerned with, go to Properties > Metadata, and then set what you want. The “key” will be Cache-Control and the value will be a directive such as max-age=2628000, where the number is the lifetime in seconds.

Unless you are going to use a third-party tool or the AWS SDK, the setting for each file needs to be done manually. You can’t set a cache policy at the bucket level.
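For the SDK route, here is a rough sketch using boto3, the AWS SDK for Python. It assumes you pass in a client created with boto3.client("s3"); the function names, the bucket name, and the prefix in the usage comment are placeholders of mine. The trick is copying each object onto itself with new metadata. Be aware that a REPLACE copy drops any existing headers you don’t pass again (this sketch only carries over Content-Type and user metadata), so try it on a test bucket first.

```python
def set_cache_control(s3_client, bucket: str, key: str, cache_control: str) -> None:
    """Set Cache-Control on one object by copying it onto itself."""
    # Read the current Content-Type and user metadata first, because
    # MetadataDirective="REPLACE" discards anything not passed again.
    head = s3_client.head_object(Bucket=bucket, Key=key)
    s3_client.copy_object(
        Bucket=bucket,
        Key=key,
        CopySource={"Bucket": bucket, "Key": key},
        MetadataDirective="REPLACE",
        CacheControl=cache_control,
        ContentType=head.get("ContentType", "binary/octet-stream"),
        Metadata=head.get("Metadata", {}),
    )


def set_cache_control_for_prefix(s3_client, bucket: str, prefix: str,
                                 cache_control: str) -> int:
    """Apply the same Cache-Control value to every object under prefix."""
    updated = 0
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            set_cache_control(s3_client, bucket, obj["Key"], cache_control)
            updated += 1
    return updated


# Example usage (hypothetical bucket name and prefix):
#   import boto3
#   set_cache_control_for_prefix(boto3.client("s3"), "my-site-bucket", "js/",
#                                "max-age=2628000")
```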

Of course, this header is mainly about the local browser cache. CloudFront (or any other CDN) reads it too, but only within the limits of its own TTL settings, so you need to look at those as well!

Setting a TTL in CloudFront

Cache-control will handle how long files live in your browser cache, but what about how long they live in the CloudFront cache? Now we need to look at the “TTL.” On the header side, the relevant directive is s-maxage, which sets the maximum age for a shared cache such as a CDN. On the CloudFront side, the dashboard lets you control how long files stay cached at the edge. You’ll have to do this using specific file paths, wildcards, and/or file types (as in /path/*.js) in order to control which files are affected.

To do this: go to CloudFront > your distribution > Cache Behavior Settings and then set Object Caching to Customize. Now you can change the TTL (time to live). Specifically, you can control the Minimum, Maximum, and Default caching time of files in CloudFront. “Change” is the operative word here: CloudFront generally passes cache directives through rather than setting them itself.

You’ll have to read the “i” info tooltips carefully because the settings here work in coordination with the cache-control headers from the origin (you know, S3). For example, the Default TTL setting says:

The default amount of time, in seconds, that you want objects to stay in CloudFront caches before CloudFront forwards another request to your origin to determine whether the object has been updated. The value that you specify applies only when your origin does not add HTTP headers such as Cache-Control max-age, Cache-Control s-maxage, and Expires to objects.

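So basically, if the origin doesn’t send Cache-Control max-age, Cache-Control s-maxage, or Expires, CloudFront falls back to the Default TTL. It doesn’t add those headers for you. The Minimum and Maximum TTL settings, on the other hand, put a floor and a ceiling on whatever the origin’s headers ask for.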
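Here is how I picture that interaction, written as a small function. Treat it as a simplified mental model and not CloudFront’s actual algorithm: it ignores Expires and directives like no-cache, no-store, and private, and the function names are my own. The default values are the ones the CloudFront settings start with (0, 86400, and 31536000 seconds).

```python
import re
from typing import Optional


def origin_ttl(cache_control: Optional[str]) -> Optional[int]:
    """Return the lifetime a shared cache would read: s-maxage wins over max-age."""
    if not cache_control:
        return None
    for directive in ("s-maxage", "max-age"):
        match = re.search(rf"(?:^|[\s,]){directive}\s*=\s*(\d+)", cache_control, re.I)
        if match:
            return int(match.group(1))
    return None


def effective_ttl(cache_control: Optional[str], minimum: int = 0,
                  default: int = 86400, maximum: int = 31536000) -> int:
    """Simplified model: use the origin's value, or the default if there is none,
    then keep the result between the minimum and maximum TTL."""
    requested = origin_ttl(cache_control)
    if requested is None:
        requested = default
    return max(minimum, min(requested, maximum))


print(effective_ttl(None))                           # 86400
print(effective_ttl("max-age=2628000"))              # 2628000
print(effective_ttl("max-age=60", minimum=300))      # 300
print(effective_ttl("max-age=600, s-maxage=3600"))   # 3600
```

The third example is the “override” case: the origin asked for one minute, but a Minimum TTL of five minutes wins.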

That’s a long way of saying you can use CloudFront to deal with your caching problems, but it’s more of an override than a great way to work with specific files.

Kill My Cache Now! (Manual Cache Invalidation)

It’s also worth noting that you can do manual, one-time invalidations against a file, wildcard, or directory path using the Invalidations tab in your CloudFront Distribution’s settings. Amazon warns against the costs of doing this as opposed to using the other options mentioned, but it works in a pinch.
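If you’d rather script this than click through the console, boto3 exposes the same operation as create_invalidation on the CloudFront client. The sketch below assumes you pass in a client created with boto3.client("cloudfront"); the helper names and the distribution ID in the usage comment are placeholders, not real values.

```python
import time
from typing import Iterable, Optional


def invalidation_batch(paths: Iterable[str],
                       caller_reference: Optional[str] = None) -> dict:
    """Build the InvalidationBatch structure that create_invalidation expects."""
    # Paths are relative to the distribution root and written with a leading slash.
    items = [p if p.startswith("/") else "/" + p for p in paths]
    return {
        "Paths": {"Quantity": len(items), "Items": items},
        # CallerReference must be unique per request; a timestamp is one simple option.
        "CallerReference": caller_reference or str(time.time()),
    }


def invalidate(cloudfront_client, distribution_id: str, paths: Iterable[str]) -> dict:
    """Ask CloudFront to drop the cached copies of the given paths."""
    return cloudfront_client.create_invalidation(
        DistributionId=distribution_id,
        InvalidationBatch=invalidation_batch(paths),
    )


# Example usage (hypothetical distribution ID):
#   import boto3
#   invalidate(boto3.client("cloudfront"), "EXAMPLEDISTID", ["/index.html", "/js/*"])
```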

Keep in mind one important note from the AWS instructions:

By default, each object automatically expires after 24 hours.

Kind of an important thing to point out, right? So if you simply can’t figure any of this out, everything will be better by this time tomorrow!

Sal August 6th, 2018
